Important links:

Code: https://glitch.com/edit/#!/asl-comunicator

Live Site: https://asl-comunicator.glitch.me

Background and Intent

As a hard-of-hearing individual, I have found working from home equally awesome and frustrating. It is awesome because new communication tools have made collaborative work easier in the internet age. It is frustrating because sharing a home as a workspace means less privacy, along with potentially awkward Zoom bombs and other interruptions by household members. This project draws inspiration from these awkward situations and aims to offer an alternative way to avoid Zoom bombs.

By keeping the webpage open, couples who work from home but would rather not be distracted by more formal modes of communication can send each other quick messages from time to time. It is a simple idea with a lot of potential.

Scaling up the technical interactions explored in this script could expand its functionality and use cases into areas such as learning ASL, deaf communication, and accessible painting without tools.

After doing several experiments with PoseNet, I felt confident using ml5 and other pose estimation tools and wanted to continue exploring these libraries. My user story goals were simple:

- Be able to launch on any screen with a connected camera

- Control cursor movement with fingers

- Send textual messages through hand/ASL signing

- Interact with any other connected user

Back-end

While ml5's PoseNet provides a great way to track full-body poses, it does not track individual finger movements. After some digging around Google's GitHub page, I came across MediaPipe Hands, which is available for TensorFlow.js as the handpose model. This library tracks keypoints on the individual fingers of one hand at a time.

To use this library, I added the following packages to my package.json file:

```json
"@tensorflow-models/handpose": "^0.0.7",
"@tensorflow/tfjs-backend-wasm": "^3.9.0",
"@tensorflow/tfjs-backend-webgl": "^3.11.0",
"@tensorflow/tfjs-converter": "^3.11.0",
"@tensorflow/tfjs-core": "^3.11.0"
```

I also wanted to make this a participatory interaction, so I added the socket.io library to enable real-time communication between users of the app. I used Node.js and Express as the basis for the server, along with a socket.io listener.
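A minimal sketch of what such a listener might look like, assuming the server simply re-broadcasts whatever a client emits. The event name `"message"` and the function name `registerRelay` are hypothetical and not taken from the project's code. The handler uses only standard socket.io server calls (`io.on("connection")`, `socket.on`, `socket.broadcast.emit`), so it can be exercised with a stand-in object before being wired to a real server.

```javascript
// Hypothetical relay: every payload a client emits under EVENT is forwarded
// to all other connected clients. Uses only standard socket.io server calls.
const EVENT = "message"; // hypothetical event name

function registerRelay(io) {
  io.on("connection", (socket) => {
    socket.on(EVENT, (data) => {
      // broadcast.emit sends to every client except the sender;
      // io.emit(EVENT, data) would include the sender as well.
      socket.broadcast.emit(EVENT, data);
    });
  });
}

// Wiring with Express and socket.io v4 (assumes both packages are installed):
// const express = require("express");
// const http = require("http");
// const { Server } = require("socket.io");
// const app = express();
// app.use(express.static("public"));
// const server = http.createServer(app);
// registerRelay(new Server(server));
// server.listen(process.env.PORT || 3000);
```

Broadcasting to everyone except the sender assumes each client already renders its own messages locally; if not, `io.emit` is the simpler choice.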

Front-end

I did not place much emphasis on the visual design, as this was a very rough prototype. My intention was mainly to test a hypothesis through technical iteration. I broke the project down into three general steps:

- Create the necessary objects

- Create paint and text interactions

- Send these interactions over the server to other users

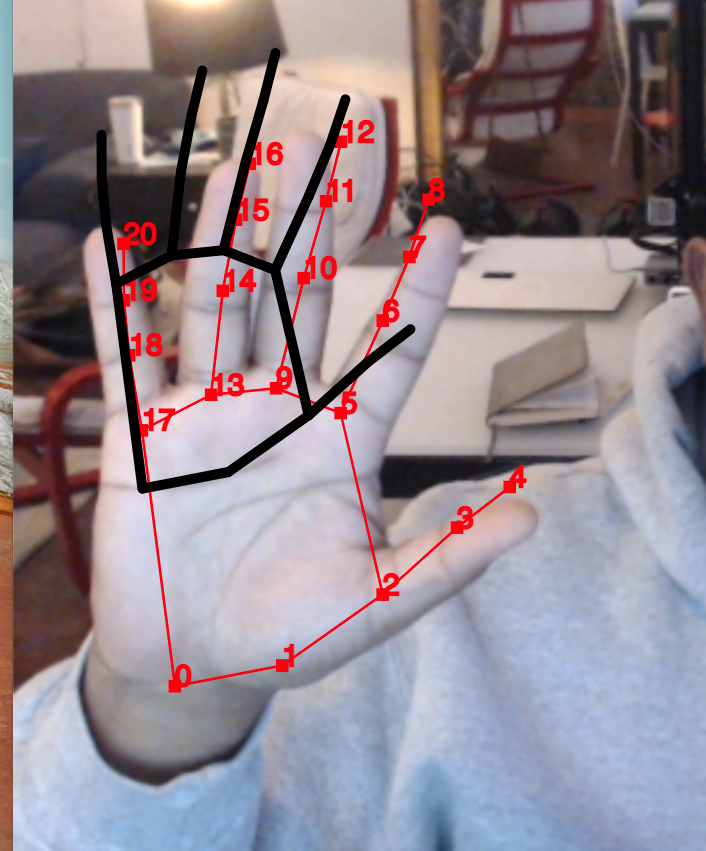

I found that the best way to accomplish this was with p5.js, socket.io, and a simple static webpage. The webpage deployed easily, and creating the base objects in the sketch was straightforward. Early in the design, I used Google's documentation to create a simple keypoint skeleton.
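A keypoint skeleton can be sketched as a list of line segments, assuming the standard 21-point hand layout (0 is the wrist, followed by four keypoints per finger from base to tip, thumb first). The names `FINGER_CHAINS` and `skeletonSegments` are hypothetical; the function only turns landmarks into segments, leaving the drawing to p5.

```javascript
// Assumed 21-point hand layout: 0 = wrist, then 4 keypoints per finger
// (base to tip) for thumb, index, middle, ring and little finger.
const FINGER_CHAINS = [
  [0, 1, 2, 3, 4],     // thumb
  [0, 5, 6, 7, 8],     // index finger
  [0, 9, 10, 11, 12],  // middle finger
  [0, 13, 14, 15, 16], // ring finger
  [0, 17, 18, 19, 20], // little finger
];

// Convert [x, y, z] landmarks into [x1, y1, x2, y2] segments,
// one per pair of consecutive keypoints along each finger.
function skeletonSegments(landmarks) {
  const segments = [];
  for (const chain of FINGER_CHAINS) {
    for (let i = 0; i < chain.length - 1; i++) {
      const a = landmarks[chain[i]];
      const b = landmarks[chain[i + 1]];
      segments.push([a[0], a[1], b[0], b[1]]);
    }
  }
  return segments;
}
```

In a p5 `draw()` loop, each segment could then be passed straight to `line(x1, y1, x2, y2)`.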

This proved extremely useful, as I could see my hand movements throughout development, identify errors, and decide on the appropriate response to each hand shape. I also added a random color generator and a paint tracker.
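A rough sketch of the color generator and paint tracker, assuming the cursor follows the index fingertip (landmark 8) and that the video is displayed as a mirror image. `randomColor`, `PaintTrail`, and `fingertipCursor` are hypothetical names, and capping the trail at a fixed number of points is an illustrative choice rather than the project's behavior.

```javascript
// Random RGB color, each channel an integer from 0 to 255.
function randomColor() {
  const channel = () => Math.floor(Math.random() * 256);
  return [channel(), channel(), channel()];
}

// Stores recently painted points so they can be redrawn every frame.
class PaintTrail {
  constructor(maxPoints = 500) {
    this.maxPoints = maxPoints;
    this.points = [];
  }

  add(x, y, color) {
    this.points.push({ x, y, color });
    // Drop the oldest points once the trail exceeds its limit.
    if (this.points.length > this.maxPoints) {
      this.points.splice(0, this.points.length - this.maxPoints);
    }
  }
}

// Cursor position from the index fingertip (landmark 8), flipped
// horizontally to match a mirrored video display (an assumption).
function fingertipCursor(landmarks, videoWidth) {
  const [x, y] = landmarks[8];
  return { x: videoWidth - x, y };
}
```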

There are only three recognized words and associated actions in this version.

- “Hi” when an open palm is waved.

- “Sure” when a thumbs up is given.

- “Cool!” when the little finger and thumb are above the middle finger.

I considered creating an NeDB database of the text responses, but I was running out of time. Knowing my library of hand signs would be very limited, I opted to create individual variables. In retrospect, I could have cleaned up my script to be more efficient, but this approach offers maximum flexibility for now.

With the base code in place, I started defining rules for each word in my library of responses. Using the finger landmark indices (I kept a screenshot of the landmark diagram for reference), I wrote a few logical conditions.

```javascript
// landmarks holds the 21 [x, y, z] keypoints of the detected hand.
// y grows downward in image coordinates, so a smaller y means "higher".

// If landmark 16 (tip of ring finger) is higher than landmark 4 (tip of thumb), print "Hi"
if (landmarks[16][1] < landmarks[4][1]) {
  printText();
}

// If landmark 4 (tip of thumb) and landmark 20 (tip of little finger) are both higher than landmark 12 (tip of middle finger), print "Cool!"
if (landmarks[4][1] < landmarks[12][1] && landmarks[20][1] < landmarks[12][1]) {
  printText2();
}

// If landmark 8 (tip of index finger) is below the tip of the thumb but higher than landmark 20 (tip of little finger), print "Sure"
if (landmarks[8][1] > landmarks[4][1] && landmarks[8][1] < landmarks[20][1]) {
  printText3();
}
```

These rules worked pretty well for a limited library, and the responses are very fast.
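Along the lines of the cleanup mentioned earlier, the same three rules could be kept in a single table so that adding a word means adding one entry. This is a hypothetical refactor (`SIGNS`, `classifySign`, and the landmark constants are illustrative names), and it differs from the independent `if` statements in one way: `find` returns only the first matching word per frame, so the order of the table sets precedence.

```javascript
// Landmark indices used by the original rules.
const THUMB_TIP = 4;
const INDEX_TIP = 8;
const MIDDLE_TIP = 12;
const RING_TIP = 16;
const PINKY_TIP = 20;

// y grows downward in image coordinates, so a smaller y means "higher".
const yOf = (landmarks, i) => landmarks[i][1];

// Rules are checked in order; the first match wins.
const SIGNS = [
  { word: "Hi", test: (lm) => yOf(lm, RING_TIP) < yOf(lm, THUMB_TIP) },
  {
    word: "Cool!",
    test: (lm) =>
      yOf(lm, THUMB_TIP) < yOf(lm, MIDDLE_TIP) &&
      yOf(lm, PINKY_TIP) < yOf(lm, MIDDLE_TIP),
  },
  {
    word: "Sure",
    test: (lm) =>
      yOf(lm, INDEX_TIP) > yOf(lm, THUMB_TIP) &&
      yOf(lm, INDEX_TIP) < yOf(lm, PINKY_TIP),
  },
];

// Returns the matched word, or null when no rule applies.
function classifySign(landmarks) {
  const match = SIGNS.find((sign) => sign.test(landmarks));
  return match ? match.word : null;
}
```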

Next, I needed a way to assemble and emit this data. I created an object called data to hold the socket data and sent it through the server. The responses were printed and shared with all viewers of the webpage. To make the interactions more fluid, I used an easing script from the p5.js reference library to smooth the movements and set the frame rate to 30 frames per second to add stability. The interaction, while simple, opened up new modes of communication that could be customized for all ages, cultures, and languages.
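A small sketch of the payload and easing steps, assuming a payload made of cursor position, color, and word. The field names, `buildPayload`, and the `"message"` event name are hypothetical. The easing follows the pattern of the p5.js Easing example: each frame, the value moves a fixed fraction of the remaining distance toward its target.

```javascript
// Hypothetical payload shape; the project's actual data object may differ.
function buildPayload(cursor, color, word) {
  return { x: cursor.x, y: cursor.y, color, word };
}

// Move `current` a fraction `easing` of the way toward `target`.
function ease(current, target, easing = 0.05) {
  return current + (target - current) * easing;
}

// Possible use inside a p5 sketch (assumes a socket.io client `socket`):
// function setup() { frameRate(30); }
// function draw() {
//   cursorX = ease(cursorX, targetX, 0.1);
//   cursorY = ease(cursorY, targetY, 0.1);
//   socket.emit("message", buildPayload({ x: cursorX, y: cursorY }, color, word));
// }
```

Because each step covers only part of the remaining distance, jitter in the raw fingertip position is damped, at the cost of a slight lag behind the hand.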

I think my biggest stumble in this project was getting the sockets to work. I spent so much time debugging my script that I lost critical time that should have gone into designing the experience. I'm still extremely pleased with the resulting interaction and excited to continue fine-tuning it.

Next Steps

I imagine an interface like this could scale and lead to many creative uses. I have a passion project to design home and childcare IoT accessories around this general application, and I might explore it with sensors in my final project.